Parallelizing a Neural Network Model of Systems Memory Consolidation & Reconsolidation

Grace Dessert and Minhaj Mussain, April 2022

We present two methods for parallelizing a neural network model of systems memory consolidation and reconsolidation, originally described by Helfer & Shultz (2020), and compare their performance on up to 256 tasks across 4 nodes on Stampede2 (Texas Advanced Computing Center (TACC), The University of Texas at Austin).

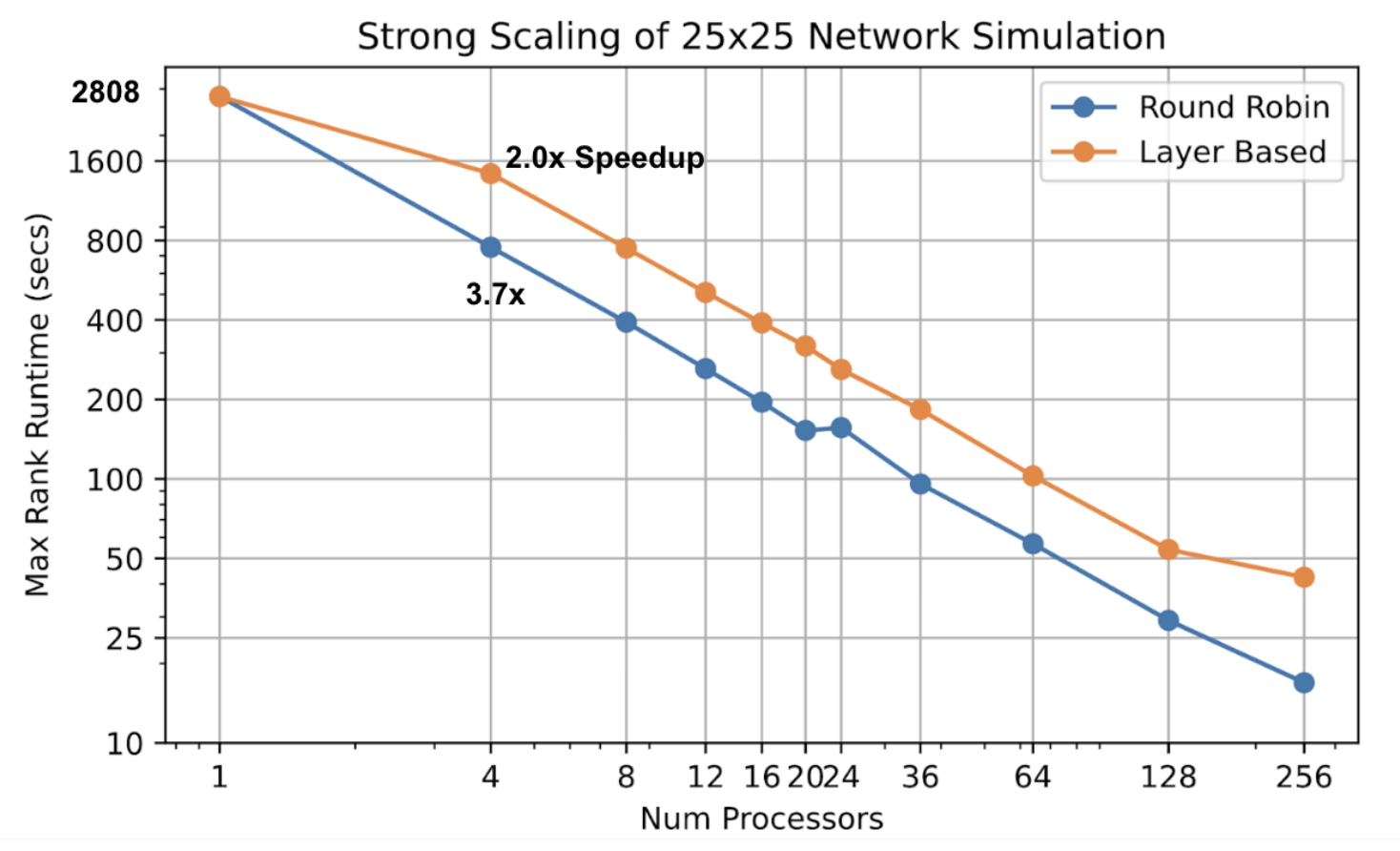

Using two parallelization approaches for this network model, we decreased the walltime of large network simulations, achieving a maximum speedup of 165x (from 2808 sec to 17 sec) with 256 processors on a model with 25x25 neurons per layer, using the round-robin implementation. Parallel efficiency stays roughly constant between 4 and 256 tasks, at about 80% for the round-robin implementation and about 40% for the layer-based implementation. In our weak scaling trials, we also greatly increased the number of neurons that can be simulated in a feasible amount of time: a simulation with 1600 neurons per layer ran in 105 seconds using the round-robin implementation. These results show that our parallelization approach enables substantially larger network simulations of Helfer and Shultz’s model of systems memory consolidation and reconsolidation.
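For readers unfamiliar with the metrics, the speedup quoted above follows the usual definition: serial walltime divided by parallel walltime. Parallel efficiency is that speedup divided by the number of tasks. The sketch below is illustrative only. The function names are ours, and it assumes the 2808-second run is the single-task baseline, which the text implies but does not state.

```python
def speedup(baseline_seconds, parallel_seconds):
    """Ratio of baseline walltime to parallel walltime."""
    return baseline_seconds / parallel_seconds


def parallel_efficiency(baseline_seconds, parallel_seconds, tasks):
    """Speedup divided by the number of tasks (1.0 would be ideal scaling)."""
    return speedup(baseline_seconds, parallel_seconds) / tasks


# Timings quoted above for the 25x25 neuron-per-layer model (round-robin).
# Assumption: 2808 s is the single-task baseline.
s = speedup(2808, 17)
e = parallel_efficiency(2808, 17, 256)

print(f"speedup: {s:.0f}x")
print(f"efficiency at 256 tasks: {e:.0%}")
```

For this single data point the efficiency comes out near 65%. The approximate 80% and 40% figures quoted above are reported across the 4 to 256 task range and are not derived from this calculation.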

Check out the GitHub repository